Artificial intelligence (AI) and privacy protection are increasingly intertwined in today’s digital world. With the rise of AI and machine learning (ML), online privacy protection has become more important than ever. AI systems can analyze vast amounts of data, including personal information, and use it to make decisions and predictions about individuals.

One of the main reasons AI matters in online privacy protection is that it can both help and harm individuals. On one hand, AI can be used to build more robust privacy protections, such as identifying and blocking malicious online activity, detecting phishing attempts, and flagging suspicious behavior. On the other hand, AI can be used to invade privacy: collecting data without authorization, analyzing personal data (including facial recognition data) without consent, or using that data to make decisions that negatively affect individuals’ lives.

Given these risks and benefits, it is important for individuals and organizations to stay informed about developments in this field. By understanding how AI is being used to protect or invade privacy, individuals can better protect themselves and their personal information online. Policymakers and industry leaders, for their part, must work together to develop ethical and transparent guidelines for the use of AI in privacy protection, so that these technologies are used responsibly and for the benefit of all.

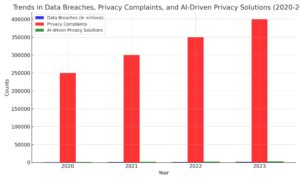

| Year | Data Breaches (in millions) | Privacy Complaints | AI-driven Privacy Solutions Deployed |
|---|---|---|---|
| 2020 | 1000 | 250,000 | 1500 |
| 2021 | 1200 | 300,000 | 2000 |
| 2022 | 1500 | 350,000 | 2500 |
| 2023 | 1800 | 400,000 | 3200 |

Key points from the table:

- Data breaches are increasing each year.

- More people are complaining about privacy issues.

- Use of AI to protect privacy is growing.
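The trends in these points can be checked with a little arithmetic. The sketch below computes the overall percentage change from 2020 to 2023 for each column. The figures are copied from the table as given, and the function names are illustrative.

```python
# Figures copied from the table above, in the table's own units:
# year -> (data breaches, privacy complaints, AI-driven solutions deployed)
TABLE = {
    2020: (1000, 250_000, 1500),
    2021: (1200, 300_000, 2000),
    2022: (1500, 350_000, 2500),
    2023: (1800, 400_000, 3200),
}


def pct_change(first, last):
    """Percentage change from first to last, rounded to one decimal."""
    return round((last - first) / first * 100, 1)


def overall_growth(table):
    """Percentage change per column between the earliest and latest year."""
    years = sorted(table)
    start, end = table[years[0]], table[years[-1]]
    return tuple(pct_change(a, b) for a, b in zip(start, end))


growth = overall_growth(TABLE)
print(growth)  # (80.0, 60.0, 113.3)
```

On these figures, deployments of AI-driven solutions grew faster (about 113%) than breaches (80%) or complaints (60%).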

The Role of AI in Enhancing Online Privacy

As the amount of personal data generated online continues to grow, protecting online privacy has become a more significant concern. AI has emerged as a powerful tool in enhancing online privacy and data security. AI-driven privacy solutions can help detect and prevent privacy violations while also ensuring that data is used ethically and transparently.

AI-Driven Privacy Solutions

AI systems can analyze large amounts of data and identify patterns that may indicate a privacy violation. For example, machine learning algorithms can be trained to detect suspicious activity on a network or to identify potential data breaches. AI can also support privacy by design by helping ensure that data is collected and processed in a way that minimizes the risk of privacy violations.

One of the most promising AI-driven privacy solutions is synthetic data: artificially generated data that mimics the statistical properties of real data without reproducing the records of real individuals. Synthetic data can be used to train machine learning algorithms with far less exposure of real personal information, which reduces the risk of privacy violations while still allowing powerful AI systems to be developed.
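To make the synthetic data idea concrete, here is a minimal sketch using only the Python standard library. It assumes records with a numeric `age` and a categorical `plan` field (both hypothetical), fits simple per-column statistics, and samples new rows from them. Real generators are far more sophisticated, and independent per-column sampling like this carries no formal privacy guarantee.

```python
import random
import statistics


def synthesize(records, n, seed=0):
    """Generate n synthetic rows that mimic simple statistics of `records`.

    Assumes each record has a numeric "age" and a categorical "plan";
    both field names are hypothetical.
    """
    rng = random.Random(seed)  # seeded so the output is reproducible
    ages = [r["age"] for r in records]
    mu, sigma = statistics.mean(ages), statistics.stdev(ages)
    plans = [r["plan"] for r in records]

    synthetic = []
    for _ in range(n):
        synthetic.append({
            # Draw age from a normal distribution fitted to the real ages.
            "age": max(0, round(rng.gauss(mu, sigma))),
            # Sampling from the observed list keeps category frequencies
            # roughly the same as in the real data.
            "plan": rng.choice(plans),
        })
    return synthetic


# Made-up input rows; the "name" field is never copied to the output.
real = [
    {"name": "Ana", "age": 34, "plan": "free"},
    {"name": "Ben", "age": 41, "plan": "pro"},
    {"name": "Chi", "age": 29, "plan": "free"},
    {"name": "Dev", "age": 52, "plan": "pro"},
]
fake = synthesize(real, n=100)
```

The output rows carry no names or identifiers, but this approach also discards correlations between columns, which is one reason production systems tend to use purpose-built generators.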

Challenges and Risks of AI in Privacy

While AI can be a powerful tool for enhancing online privacy, it also presents challenges and risks. One challenge is ensuring that AI systems are designed and implemented in a way that protects privacy. Privacy by design addresses this by building privacy considerations into every stage of design and development, so that systems are built with privacy in mind from the outset rather than having it added later.

Another challenge is transparency and accountability. AI systems can be complex and hard to understand, which makes privacy violations difficult to identify. Systems need to be transparent enough that individuals can see how their data is being used and can hold companies accountable for any violations.
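One way privacy by design shows up in practice is data minimization combined with pseudonymization: keep only the fields a task needs and replace direct identifiers before data reaches a model. The sketch below is one possible illustration. The field names, the allow-list, and the key are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only attributes the analysis needs.
ALLOWED_FIELDS = {"country", "signup_year"}


def pseudonymize(user_id: str, key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, key: bytes) -> dict:
    """Keep only allow-listed fields and swap the ID for a pseudonym."""
    slim = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    slim["user_ref"] = pseudonymize(record["user_id"], key)
    return slim


key = b"demo-key-load-from-a-secret-store"  # placeholder, not a real secret
raw = {"user_id": "u-1001", "email": "ana@example.com",
       "country": "PT", "signup_year": 2021}
print(sorted(minimize(raw, key)))  # ['country', 'signup_year', 'user_ref']
```

Pseudonymized records can still count as personal data, since whoever holds the key can re-link them, so the key needs the same protection as the original identifiers.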

Regulatory Landscape for AI and Privacy

Global Privacy Regulations

As AI technology continues to advance, governments around the world are grappling with how to regulate its use in the context of privacy protection.

The European Union’s General Data Protection Regulation (GDPR) is one of the most comprehensive privacy regulations to date. Among other things, it requires data protection impact assessments for high-risk processing and grants individuals a right to erasure, often called the right to be forgotten. The GDPR has set a global standard for privacy protection, with many countries adopting similar regulations.

The United States has taken a different approach, with the Federal Trade Commission (FTC) focusing on enforcing existing laws rather than creating new regulations specific to AI. However, there have been calls for a comprehensive federal privacy law to address the unique challenges posed by AI technology.

Other countries, such as Canada and Japan, rely on general privacy laws that also cover AI systems processing personal data. Canada’s Personal Information Protection and Electronic Documents Act (PIPEDA) contains no AI-specific provisions; Bill C-27, tabled in 2022, proposed adding them through a separate Artificial Intelligence and Data Act. Japan’s Act on the Protection of Personal Information (APPI) likewise applies to AI-driven data processing as a general data protection law rather than an AI-specific one.

AI Governance and Accountability

In addition to privacy regulations, there is a growing focus on AI governance and accountability. The Algorithmic Accountability Act, introduced in the United States Congress in 2019 but not enacted, would require companies to assess the impact of their algorithms on privacy, fairness, and bias. The proposed legislation would also require companies to conduct regular audits of their algorithms and provide transparency in their decision-making processes.

In California, the California Consumer Privacy Act (CCPA) does not regulate AI by name. As amended by the California Privacy Rights Act, however, it directs the California Privacy Protection Agency to issue regulations on automated decision-making technology, including consumers’ access and opt-out rights, and on risk assessments for processing that poses a significant risk to privacy.

Ethical Considerations and Societal Impact

As AI continues to play a bigger role in decision-making, ethical concerns have become increasingly important. Three major areas of ethical concern for society are privacy and surveillance, bias and discrimination, and the role of human judgment.

Bias and Discrimination in AI

One of the most pressing ethical concerns in AI is the issue of bias and discrimination. AI models are only as unbiased as the data they are trained on, and if the data is biased, then the AI model will be biased as well. This can lead to unfair treatment of certain groups of people, perpetuating existing biases and discrimination. Therefore, it is important to ensure that the data used to train AI models is diverse and representative of all groups in society.
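A common first check for this kind of bias is to compare how often each group receives a favorable outcome. The sketch below computes per-group selection rates and the gap between them, a measure often called the demographic parity difference. The groups and outcomes are made up for illustration, and a small gap on this one metric does not by itself show that a model is fair.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of positive outcomes per group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(decisions):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Made-up outcomes for two hypothetical groups.
sample = [("a", True), ("a", True), ("a", True), ("a", False),
          ("b", True), ("b", False), ("b", False), ("b", False)]
print(selection_rates(sample))  # {'a': 0.75, 'b': 0.25}
print(parity_gap(sample))       # 0.5
```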

Transparency and Trust in AI Systems

Another ethical concern is the lack of transparency in AI systems and the distrust that follows from it. As AI becomes more complex, it becomes increasingly difficult to understand how it makes decisions, and that opacity can have serious consequences for society. To address this concern, AI systems should be transparent enough that people can understand how they work. This helps build trust and supports responsible and ethical use.

Accountability is closely tied to transparency. The organizations that build and deploy AI systems must be answerable for the decisions those systems make, and there must be a mechanism for holding them responsible for any harm caused. This helps ensure that AI systems are used fairly and ethically and that they do not perpetuate existing biases and discrimination.
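For simple models, one way to let people understand how a decision was reached is to report how much each input contributed to it. The sketch below does this for a linear scoring model, where each contribution is just weight times value. The weights and feature names are hypothetical, and the technique does not carry over directly to complex models such as deep networks.

```python
def explain(weights, features, bias=0.0):
    """Return a score and each feature's contribution to it.

    Works only for a linear model, where score = bias + sum(w_i * x_i).
    """
    contributions = {name: weights[name] * value
                     for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Largest absolute contributions first, so the main drivers lead.
    ranked = sorted(contributions.items(),
                    key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical weights and inputs for a risk-scoring model.
weights = {"failed_logins": 2.0, "account_age_years": -1.0, "new_device": 0.5}
features = {"failed_logins": 1.0, "account_age_years": 3.0, "new_device": 4.0}

score, ranked = explain(weights, features)
print(score)      # 1.0
print(ranked[0])  # ('account_age_years', -3.0)
```

Storing the ranked contributions alongside each decision gives reviewers and affected individuals a record of which inputs mattered most.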

Future Directions in AI for Online Privacy

As AI technology continues to advance, so does its potential to improve online privacy protection. Here are a few possible directions for the future of AI in this field:

Advancements in AI Technology

As data privacy concerns continue to grow, AI technology is likely to become more sophisticated in its ability to detect and prevent data breaches. One area of focus is the development of more advanced deep learning algorithms that can analyze large volumes of data in real time, helping to identify and mitigate threats before they cause harm.

Another area of focus is more secure infrastructure for storing and transmitting data. This could involve blockchain technology, whose hash-linked records make tampering evident, or more advanced encryption methods that better resist attack.
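The integrity property that blockchains rely on can be illustrated with a hash chain, in which every entry stores the hash of the one before it. The sketch below is only that core idea, written with the standard library. It is not a blockchain (there is no distribution or consensus), and the event names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(payload, prev_hash):
    """Hash an entry's payload together with the previous entry's hash."""
    body = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(chain, payload):
    """Add a payload to the chain, linking it to the last entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"payload": payload, "prev": prev_hash,
                  "hash": entry_hash(payload, prev_hash)})


def verify(chain):
    """Return True if no entry has been altered or reordered."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(entry["payload"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append(log, {"event": "record_read", "record": 17})
append(log, {"event": "record_export", "record": 17})
print(verify(log))  # True

log[0]["payload"]["record"] = 99  # tamper with an early entry
print(verify(log))  # False
```

Because each hash depends on everything before it, changing an old entry invalidates the chain from that point on, which is what makes after-the-fact edits detectable.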

The Importance of Data Management

Effective data management is essential for ensuring the trustworthiness of AI systems used for online privacy protection. This involves collecting and analyzing data and ensuring that it is accurate, relevant, and up to date. One approach is big data analytics, which helps organizations identify patterns and trends in large volumes of data and is particularly useful for detecting security threats and data breaches. Another is the use of data science methodologies, which help organizations understand the underlying patterns and relationships in their data, identify potential vulnerabilities in their systems, and develop more effective strategies for protecting against them.

Frequently Asked Questions

How can AI enhance the protection of personal data online?

AI can enhance the protection of personal data online by identifying potential security breaches and detecting anomalous behavior. It can also help locate and classify sensitive information so that it can be encrypted or masked, and it can monitor network traffic for potential threats. Because AI can analyze large amounts of data in real time, it allows security incidents to be identified and handled quickly. By automating routine tasks, AI can also reduce the risk of human error in data management.
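Before sensitive information can be encrypted or masked, it has to be found. The sketch below uses two deliberately simple regular expressions as a rule-based stand-in for the trained classifiers a real system would use. The patterns and labels are illustrative assumptions and would miss many real-world formats.

```python
import re

# Deliberately simple patterns; real PII detection needs far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
}


def find_pii(text):
    """Return (label, matched_text) pairs for every pattern hit."""
    return [(label, m.group()) for label, rx in PATTERNS.items()
            for m in rx.finditer(text)]


def redact(text):
    """Replace each detected value with a bracketed label."""
    for label, rx in PATTERNS.items():
        text = rx.sub(f"[{label}]", text)
    return text


message = "Reach Ana at ana@example.com or 555-010-1234 today"
print(redact(message))  # Reach Ana at [EMAIL] or [PHONE] today
```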

What are the potential risks of using AI in personal data management?

The potential risks of using AI in personal data management include data breaches, cyber-attacks, and privacy violations. AI systems can be vulnerable to attacks and can also be used to perpetrate attacks. Additionally, AI algorithms may not always be accurate, which could lead to incorrect decisions being made about personal data. There is also a risk that AI systems may be used to discriminate against individuals based on their personal data.

In what ways does AI contribute to data security and breach prevention?

AI contributes to data security and breach prevention by providing real-time threat detection, automated incident response, and continuous monitoring of network traffic. AI can also be used to identify and mitigate vulnerabilities in software and hardware systems. By analyzing large amounts of data, AI can identify patterns and anomalies that may indicate a security breach or attack.
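The simplest form of this pattern-and-anomaly analysis is statistical: learn what normal looks like, then flag values that stray too far from it. The sketch below scores new observations against a baseline using z-scores. The traffic numbers and the threshold of 3 are illustrative assumptions, and production systems generally use richer models.

```python
import statistics


def find_anomalies(baseline, observed, threshold=3.0):
    """Indices in `observed` that deviate strongly from the baseline.

    A value is flagged when its z-score against the baseline mean and
    standard deviation exceeds `threshold` in absolute value.
    """
    mean = statistics.mean(baseline)
    spread = statistics.pstdev(baseline)
    if spread == 0:
        # A flat baseline has no scale; flag anything that differs from it.
        return [i for i, x in enumerate(observed) if x != mean]
    return [i for i, x in enumerate(observed)
            if abs(x - mean) / spread > threshold]


# Hypothetical requests-per-minute counts.
baseline = [100, 102, 98, 101, 99]
observed = [101, 250, 99]
print(find_anomalies(baseline, observed))  # [1]
```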

How do AI technologies comply with existing data privacy regulations?

AI technologies must comply with existing data privacy regulations, such as the European Union’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). AI systems must be transparent and accountable, with clear explanations of how personal data is being collected, processed, and used. Additionally, AI systems must be designed with privacy in mind, with appropriate safeguards in place to protect personal data.

What ethical considerations arise from employing AI in information assurance?

Employing AI in information assurance raises ethical considerations around transparency, accountability, and fairness. AI systems must be transparent, with clear explanations of how personal data is being collected and used. They must also be accountable, with mechanisms in place to ensure that decisions made by AI systems are fair and unbiased. Additionally, ethical considerations arise around the use of personal data, with a need to balance the benefits of using personal data with the risks to individual privacy.

How can transparency and accountability be maintained when AI is used for privacy protection?

Transparency and accountability can be maintained when AI is used for privacy protection by providing clear explanations of how personal data is being collected, processed, and used. This includes giving individuals access to their personal data and allowing them to control how it is used. Additionally, AI systems must be designed with privacy in mind, with appropriate safeguards in place to protect personal data. Finally, mechanisms must be in place to ensure that decisions made by AI systems are fair and unbiased.
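Giving individuals access to and control over their data implies keeping a record of what each person has agreed to and checking it before processing. The sketch below shows one possible shape for such a record. The class, method names, and purposes are hypothetical rather than taken from any particular framework.

```python
from collections import defaultdict


class ConsentRegistry:
    """Tracks which purposes each user has agreed to, with a change log."""

    def __init__(self):
        self._granted = defaultdict(set)  # user_id -> set of purposes
        self._history = []                # (user_id, action, purpose)

    def grant(self, user_id, purpose):
        self._granted[user_id].add(purpose)
        self._history.append((user_id, "grant", purpose))

    def revoke(self, user_id, purpose):
        self._granted[user_id].discard(purpose)
        self._history.append((user_id, "revoke", purpose))

    def is_allowed(self, user_id, purpose):
        """Check consent before any processing for `purpose`."""
        return purpose in self._granted.get(user_id, set())

    def export(self, user_id):
        """Everything held about one user, for an access request."""
        return {
            "purposes": sorted(self._granted.get(user_id, set())),
            "history": [h for h in self._history if h[0] == user_id],
        }


registry = ConsentRegistry()
registry.grant("u-1001", "fraud_detection")
registry.grant("u-1001", "marketing")
registry.revoke("u-1001", "marketing")
print(registry.is_allowed("u-1001", "marketing"))  # False
```

Keeping the history alongside the current state means a later audit can show when consent was given or withdrawn.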